Fairness Indicators is designed to support teams in evaluating, improving, and comparing models for fairness concerns in partnership with the broader TensorFlow toolkit.

The tool is actively used internally by many of our products and is now available in BETA for you to try on your own use cases. We would love to partner with you to understand where Fairness Indicators is most useful and where added functionality would be valuable. Please reach out at tfx@tensorflow.org. You can provide feedback on your experience, as well as feature requests, here.

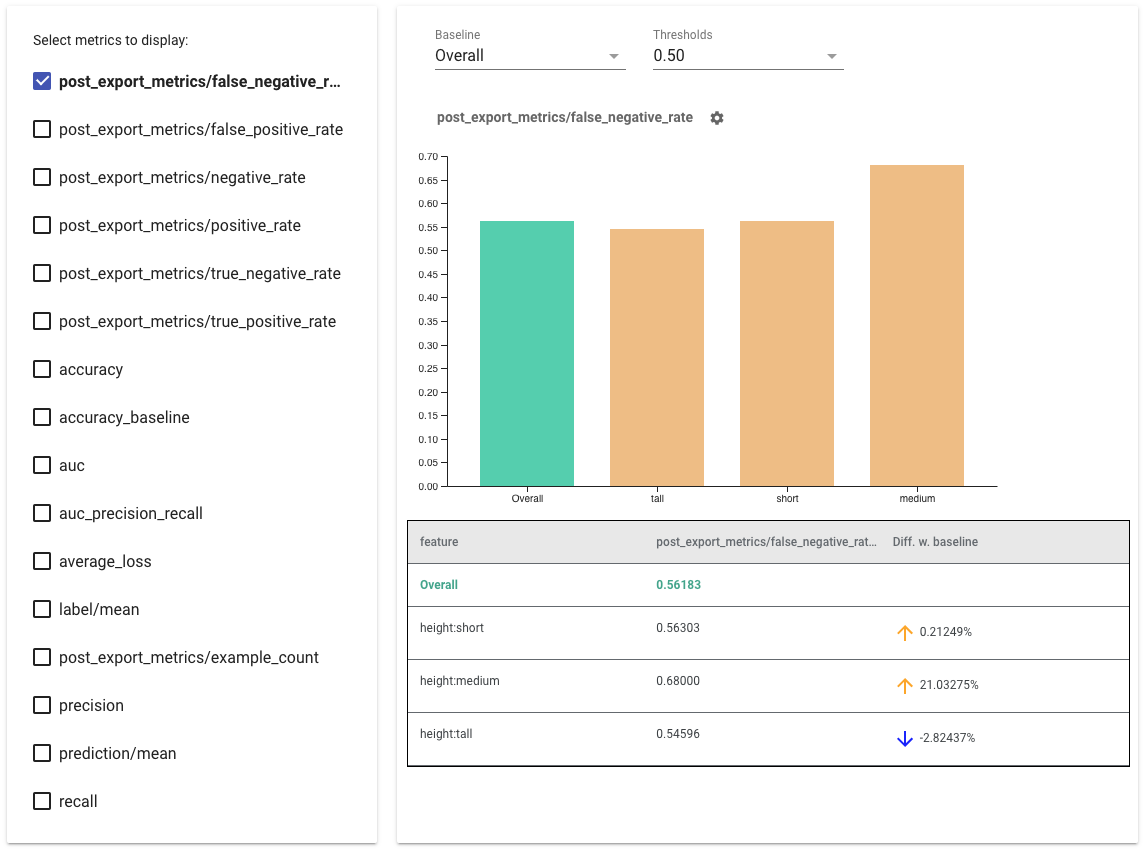

Fairness Indicators enables easy computation of commonly identified fairness metrics for binary and multiclass classifiers.
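
To make the idea of a fairness metric computed per group concrete, here is a minimal sketch in plain Python. It is not the Fairness Indicators API: the function name `false_positive_rate_by_slice`, the 0.5 threshold, and the sample data are all hypothetical and chosen only for illustration. It computes the false positive rate of a binary classifier separately for each group, which is one commonly used fairness metric.

```python
from collections import defaultdict


def false_positive_rate_by_slice(labels, scores, groups, threshold=0.5):
    """Return {group: false positive rate} at a single decision threshold.

    Hypothetical helper for illustration; not part of Fairness Indicators.
    Groups with no negative examples are omitted from the result.
    """
    false_positives = defaultdict(int)
    negatives = defaultdict(int)
    for label, score, group in zip(labels, scores, groups):
        if label == 0:  # only true negatives can become false positives
            negatives[group] += 1
            if score >= threshold:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in negatives.items()}


# Made-up data: ground-truth labels, model scores, and a group per example.
labels = [0, 0, 1, 0, 0, 1, 0, 0]
scores = [0.9, 0.2, 0.8, 0.1, 0.7, 0.6, 0.6, 0.3]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

fpr = false_positive_rate_by_slice(labels, scores, groups)
for group, rate in sorted(fpr.items()):
    print(f"group={group} false_positive_rate={rate:.3f}")
```

A large gap between the per-group rates is the kind of signal a sliced evaluation is meant to surface. In practice the same comparison would be repeated at several thresholds and on far larger datasets than fit in a Python list.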

Many existing tools for evaluating fairness concerns don’t work well on large-scale datasets and models. At Google, it is important for us to have tools that can work on billion-user systems. Fairness Indicators allows you to evaluate fairness metrics for use cases of any size.

In particular, Fairness Indicators includes the ability to:

This case study, complete with videos and programming exercises, demonstrates how Fairness Indicators can be used on one of your own products to evaluate fairness concerns over time.

The pip package download includes:

TensorFlow Models

Not using existing TensorFlow tools? No worries!

Non-TensorFlow Models

The examples directory contains several examples.

For more information on how to think about fairness evaluation in the context of your use case, see this link.

If you have found a bug in Fairness Indicators, please file a GitHub issue with as much supporting information as you can provide.