
YOLO (You Only Look Once) [0] is a well-known real-time object detection model. Unlike region-proposal approaches (RPN, R-CNN, Fast R-CNN, ...), which extract thousands of candidate regions and then classify each of them, YOLO "only looks once". Its grid cells and bounding-box regressor allow YOLO to perform object classification and object localization simultaneously.

YOLOv2 [1] mainly improves on three aspects.

YOLOv3 [2] improves on two major aspects.

Residual Neural Network (ResNet) [3] is also a very popular network for image feature extraction. Its residual blocks help the network avoid the vanishing-gradient problem and make the loss landscape smoother [4].

Gated recurrent units (GRUs) [5] are a gating mechanism in recurrent neural networks, introduced in 2014 by Kyunghyun Cho et al. They are very similar to LSTMs, but GRUs are more efficient; there is a nice comment on this by Abhishek Jaiswal.

Some great websites can help you understand them, for example "[Lecture] Evolution: from vanilla RNN to GRU & LSTMs" by Supervise.ly.

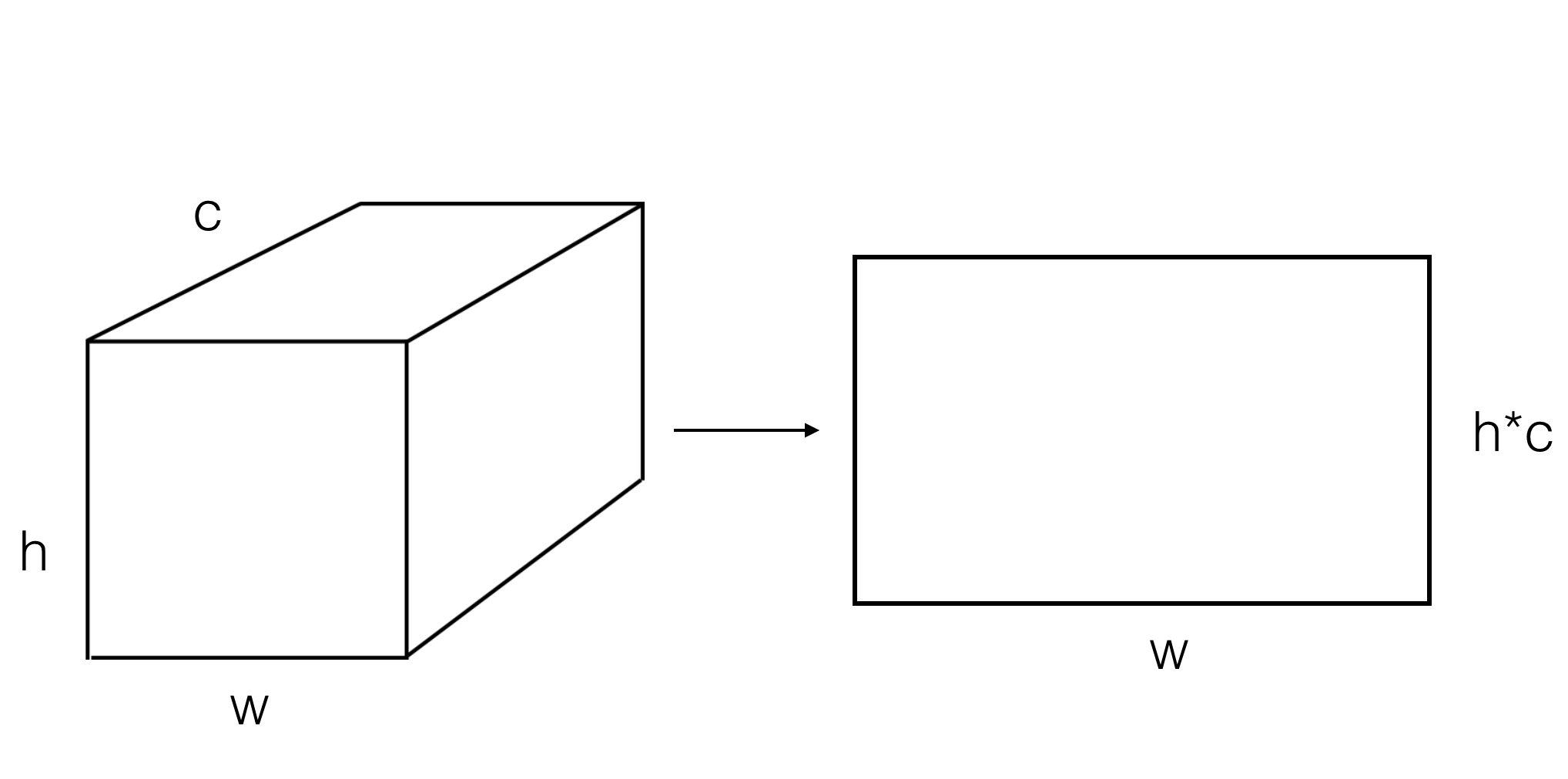

After the CNN feature extractor, I reshape the feature map from `[height, width, channel]` to `[width, height*channel]`. I get `[32, 16, 256]` at the output of the ResNet18 model. After reshaping it into `[32, 16*256]`, I connect a fully-connected layer to reduce the dimension to `[32, 64]` features, feed them into the GRU RNN model, and finally use a softmax output layer whose one-hot style outputs are decoded into a string.
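The order of the axes matters in this reshape. The following pure-Python sketch illustrates the idea on a tiny nested list; the sizes and the names `to_sequence` and `feature_map` are made up for illustration and are not taken from the repository. It moves the width axis to the front before flattening height and channel, so that each time step handed to the RNN holds exactly one image column.

```python
def to_sequence(feature_map):
    """Turn a [height][width][channel] nested list into [width][height*channel].

    Each output row is one image column (all heights and channels at a fixed
    width position), which the RNN consumes as one time step.
    """
    height = len(feature_map)
    width = len(feature_map[0])
    sequence = []
    for x in range(width):
        step = []
        for y in range(height):
            step.extend(feature_map[y][x])  # append the channels of pixel (y, x)
        sequence.append(step)
    return sequence


# Toy feature map: height=2, width=3, channel=2; each value encodes "yxc".
feature_map = [
    [[f"{y}{x}{c}" for c in range(2)] for x in range(3)]
    for y in range(2)
]

sequence = to_sequence(feature_map)
print(len(sequence), len(sequence[0]))  # 3 4
print(sequence[0])  # ['000', '001', '100', '101']
```

Flattening the `[height, width, channel]` data directly, without moving the width axis first, would mix values from different columns into the same time step.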

Because the label length varies, I pad every label to a length of 7, which is the maximum length of a Taiwan plate.

ABC123 -> ABC123_

DE2345 -> DE2345_

I use CTC loss to train this model and discard the first two outputs, which seem to be junk, so the input length is 30 instead of 32. Finally, a greedy algorithm collapses the 30 outputs into a string. In addition, don't forget to discard the `_` character.

A_C__DD__1__22__44__5 -> ACD1245
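The padding and the greedy collapse can be sketched in a few lines. This is a minimal illustration, assuming the per-time-step argmax has already been mapped to characters; the names `pad_label` and `greedy_collapse` are hypothetical and not the repository's functions.

```python
from itertools import groupby

MAX_LABEL_LEN = 7  # maximum length of a Taiwan plate, as stated above
BLANK = "_"        # padding / blank character


def pad_label(label, length=MAX_LABEL_LEN, blank=BLANK):
    """Right-pad a plate string with the blank character."""
    return label.ljust(length, blank)


def greedy_collapse(chars, blank=BLANK):
    """Merge consecutive repeated characters, then drop the blank character."""
    merged = (char for char, _ in groupby(chars))
    return "".join(char for char in merged if char != blank)


print(pad_label("ABC123"))                       # ABC123_
print(greedy_collapse("A_C__DD__1__22__44__5"))  # ACD1245
```

Repeats are merged before the blanks are removed, so a plate with a doubled character still decodes correctly as long as a blank separates the two occurrences.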

There is a CTC demo website if you want to understand more.

YOLOv2 and later predict bounding boxes relative to anchor boxes, and good initial anchor boxes improve the training process, so we need to run k-means on the bounding boxes of our dataset.
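The idea behind this step can be sketched as k-means over (width, height) pairs with `1 - IoU` as the distance. This is an illustrative sketch, not the contents of `run_kmeans.py`; the sample box sizes, the seed, and all function names are assumptions.

```python
import random


def iou_wh(box, centroid):
    """IoU of two boxes given as (width, height), aligned at a common corner."""
    inter = min(box[0], centroid[0]) * min(box[1], centroid[1])
    union = box[0] * box[1] + centroid[0] * centroid[1] - inter
    return inter / union


def kmeans_anchors(boxes, k, iterations=100, seed=0):
    """Cluster (width, height) pairs; the closest centroid has the highest IoU."""
    centroids = random.Random(seed).sample(boxes, k)
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for box in boxes:
            best = max(range(k), key=lambda i: iou_wh(box, centroids[i]))
            clusters[best].append(box)
        new_centroids = []
        for old, members in zip(centroids, clusters):
            if not members:  # keep the centroid of an empty cluster unchanged
                new_centroids.append(old)
                continue
            new_centroids.append((
                sum(w for w, _ in members) / len(members),
                sum(h for _, h in members) / len(members),
            ))
        if new_centroids == centroids:  # converged
            break
        centroids = new_centroids
    return sorted(centroids)


def mean_best_iou(boxes, anchors):
    """Average IoU between each box and its closest anchor."""
    return sum(max(iou_wh(b, a) for a in anchors) for b in boxes) / len(boxes)


# Made-up plate sizes in pixels, for illustration only.
boxes = [(44, 19), (47, 23), (45, 20), (75, 37), (78, 33), (91, 42), (71, 29)]
anchors = kmeans_anchors(boxes, k=2)
print(anchors, round(mean_best_iou(boxes, anchors), 3))
```

The mean best IoU is one common way to score a set of anchors; whether the script reports exactly this quantity is not confirmed here.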

```console
$ cd kmeans/
$ python run_kmeans.py
// write result in kmeans/k_means_anchor file.
// like this:
// Accuracy: 89.91%
// anchors = 69,25, 78,33, 71,29, 44,19, 75,37, 47,23, 58,27, 91,42, 55,22
// and paste into yolov3.cfg.
```

The LabelImg format is not suitable for darknet, so we need a conversion program to fix the issue.

```console
// run img_lbl_split.py to split xmls and imgs.
$ python img_lbl_split.py
// from
// path_data/[xmls&imgs]
// to
// path_to_data/images/plate/[imgs]
// path_to_data/labels/plate/[xmls]
```

```console
// run the convert script
$ python label_to_yolo.py
/* the formula
x = (xmin + (xmax-xmin)/2) * 1.0 / image_w
y = (ymin + (ymax-ymin)/2) * 1.0 / image_h
w = (xmax-xmin) * 1.0 / image_w
h = (ymax-ymin) * 1.0 / image_h
*/
```
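As a worked instance of the conversion formula, here is a small function that maps a pixel box in corner format to the normalized center format that darknet expects. The function name and the sample numbers are hypothetical; `label_to_yolo.py` may be organized differently.

```python
def voc_to_yolo(xmin, ymin, xmax, ymax, image_w, image_h):
    """Convert a pixel box (corner format) to normalized YOLO (x, y, w, h)."""
    x = (xmin + (xmax - xmin) / 2) / image_w  # box center, relative to width
    y = (ymin + (ymax - ymin) / 2) / image_h  # box center, relative to height
    w = (xmax - xmin) / image_w               # box width, relative to width
    h = (ymax - ymin) / image_h               # box height, relative to height
    return x, y, w, h


# Hypothetical plate box inside a 320x240 image.
print(voc_to_yolo(100, 120, 180, 150, image_w=320, image_h=240))
# (0.4375, 0.5625, 0.25, 0.125)
```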

* #### Size Issue

The original YOLOv3 input size is 608x608, but the images in our dataset are 320x240. The height of 240 causes an upsample error: YOLOv3 downsamples by 2 five times, and 240/2<sup>5</sup> = 7.5 becomes 8 instead. The upsampled size 8\*2 = 16 then cannot be concatenated with the feature map of size 15 (240/2<sup>4</sup>) from the earlier residual block.
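The arithmetic can be checked with a short sketch. It assumes each stride-2 layer rounds the size up, which matches the 7.5 -> 8 behavior described above; the helper name is made up.

```python
import math


def downsample_sizes(size, times=5):
    """Feature-map sizes after repeated stride-2 downsampling (rounding up)."""
    sizes = [size]
    for _ in range(times):
        sizes.append(math.ceil(sizes[-1] / 2))
    return sizes


for side in (608, 320, 240):
    sizes = downsample_sizes(side)
    upsampled = sizes[5] * 2  # 2x upsample of the coarsest feature map
    print(side, sizes, "upsampled:", upsampled, "target:", sizes[4])
# 608 [608, 304, 152, 76, 38, 19] upsampled: 38 target: 38
# 320 [320, 160, 80, 40, 20, 10] upsampled: 20 target: 20
# 240 [240, 120, 60, 30, 15, 8] upsampled: 16 target: 15
```

Only the side of 240 produces a mismatch, because it is not divisible by 2<sup>5</sup> = 32.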

* #### Solution

Therefore, I modified YOLOv3 in a config called `yolov3_1_cls.cfg` in darknet/cfg/. I removed one stride-2 (downsampling) conv layer, added more conv layers, and used the custom anchor boxes that I calculated in [kmeans](#kmeans).

<center>Yolov3 Architecture Modified Yolov3 Architecture </center>

<div style="text-align:center">

<img src ="https://github.com/hsuRush/DeepANPR/blob/master/demo/yolov3_structure.png?raw=true"height="540" title="Yolov3 structure" />

<img src ="https://github.com/hsuRush/DeepANPR/blob/master/demo/my_yolo_struct.png?raw=true"height="540" title="modified Yolov3 structure" />

</div>

### ResNet18+GRU

* Size Issue

I use ResNet18 as the image feature extractor and set the input image size (width x height) to 128x64. Because the plate image is much smaller than the 224x224 input in the original paper, I modify some conv layers and remove the max-pooling layers.

* CNN Architecture

the original residual block in resnet18:

<div align="center"><img src ="https://github.com/hsuRush/DeepANPR/blob/master/demo/resnet_struct.png?raw=true"height="360" title="ResNet structure" /></div>

I increase the number of conv layers by changing the residual blocks from `[2,2,2,2]` to `[2,4,4,2]` and reduce the filters to 32, 64, 128, 256. Finally, I remove the 7x7 stride-2 conv layer and the max-pooling layer and add a 5x5 conv instead.

[EDIT] Using `[2,2,2,2]` and filters=64,128,256,512 gives 98.86% accuracy (best on the Kaggle public leaderboard).

<p align="center"><img src ="https://github.com/hsuRush/DeepANPR/blob/master/demo/my_resnet_struct.png?raw=true"height="480" title="modified ResNet structure" ></p>

* RNN Architecture

I feed the data into two GRUs (GRU, GRU_b), one of which reads the sequence in reverse, then apply `add` and `batch normalization`. Next, I repeat the GRU procedure, replacing `add` with `concatenate` (GRU1, GRU2).

<p align="center"><img src ="https://github.com/hsuRush/DeepANPR/blob/master/demo/rnn_structure.png?raw=true" height="640" title="rnn structure"></p>

* Crop the image using the ground-truth labels to get the plate image, then resize it to 128x64.

This is implemented in recognition/load_img.py.

### Dataset

Thousands of labels are imprecise, such as AFG1929, ADB2531 and so on.

My two-stage method depends heavily on the ground truth, since the final accuracy is the product of the two stages' accuracies. Well-labeled data is **extremely** important for me.

<p align="center"><img src ="https://github.com/hsuRush/DeepANPR/blob/master/demo/bad_label.png?raw=true" width="360" title="loss"></p>

I re-labelled 5098 images.

## Training

Create a `train.txt` that contains the absolute paths to the images.

You also need to change the path in `darknet/cfg/plate.data`.
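One way to generate such a list is sketched below using only the standard library. The function name, the accepted extensions, and the directory layout are assumptions; adjust them to your dataset.

```python
from pathlib import Path


def write_train_list(image_dir, output_file, extensions=(".jpg", ".jpeg", ".png")):
    """Write one absolute image path per line and return the number of lines."""
    image_dir = Path(image_dir)
    paths = sorted(
        p.resolve() for p in image_dir.rglob("*")
        if p.is_file() and p.suffix.lower() in extensions
    )
    Path(output_file).write_text("".join(f"{p}\n" for p in paths))
    return len(paths)
```

For example, `write_train_list("path_to_data/images/plate", "train.txt")` would list every image under that folder, where both paths are placeholders.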

### Yolov3

```console
$ cd darknet/
$ sh train_1_cls.sh
```

The training parameters are set in darknet/cfg/yolov3_1_cls.cfg.

```
learning_rate=0.001
batch=64
max_batches = 4700
steps=3800,4100
scales=.1,.1
```

The learning rate decays by a factor of 0.1 at iterations 3800 and 4100.
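The resulting step schedule can be written out as a small function. This sketch only mirrors the `steps` and `scales` values above; it ignores any warm-up (burn-in) phase, and the exact boundary handling inside darknet is an assumption.

```python
def step_learning_rate(iteration, base_lr=0.001, steps=(3800, 4100), scales=(0.1, 0.1)):
    """Learning rate after applying every scale whose step has been reached."""
    lr = base_lr
    for step, scale in zip(steps, scales):
        if iteration >= step:
            lr *= scale
    return lr


for iteration in (0, 3799, 3800, 4100, 4700):
    print(iteration, f"{step_learning_rate(iteration):.0e}")
# 0 1e-03
# 3799 1e-03
# 3800 1e-04
# 4100 1e-05
# 4700 1e-05
```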

Because of the validation problem in darknet, I train on the whole dataset without any split. Instead, I wrote code to run a demo on YouTube videos (source). Here's a demo below; the outputs are the light-blue bounding boxes.

For the recognition model, see the configuration in train.sh and feel free to change it.

```console
// recognition/train.sh
python train.py \
--model resnet18 \
--experiment_dir ./experiment \
--epoch 40 \
--decay_epoch 20 \
--batch 16 \
--lr 1e-4 \
--valid_split 0.1
```

Train command:

```console
$ cd recognition/
$ sh train.sh
// there are some options in train.sh
// check train.py
```

I use the Keras `LearningRateScheduler` to apply an exponential decay from `decay_epoch` to the final epoch.
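A schedule of this shape might look like the sketch below. The decay constant `decay_rate` is hypothetical, since the value used in `train.py` is not stated here; the defaults for `base_lr` and `decay_epoch` are taken from the `train.sh` options. The returned function follows the `schedule(epoch, lr)` form that `LearningRateScheduler` accepts.

```python
import math


def make_schedule(base_lr=1e-4, decay_epoch=20, decay_rate=0.1):
    """Return schedule(epoch, lr=None): constant first, then exponential decay.

    decay_rate is a hypothetical per-epoch exponent chosen for illustration.
    """
    def schedule(epoch, lr=None):
        if epoch < decay_epoch:
            return base_lr  # keep the initial rate before decay_epoch
        return base_lr * math.exp(-decay_rate * (epoch - decay_epoch + 1))
    return schedule


schedule = make_schedule()
for epoch in (0, 19, 20, 39):
    print(epoch, schedule(epoch))
```

With Keras installed, this function could be passed as `LearningRateScheduler(schedule)` in the list of training callbacks.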

Because the detection model won't detect perfectly every time, I train the model with some image augmentation so the model will be more robust.

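```python
# Import path may be tensorflow.keras instead, depending on your install.
from keras.preprocessing.image import ImageDataGenerator

# Random shifts, shear, zoom and rotation imitate imperfect plate crops.
train_datagen = ImageDataGenerator(
    width_shift_range=0.1,
    height_shift_range=0.2,
    shear_range=0.2,
    zoom_range=0.1,
    rotation_range=30,
    fill_mode='nearest',
)
```

Last but not least, I save the model with the lowest validation loss using a Keras callback.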

For testing, change cfg/yolov3_1_cls.cfg to

```
#Testing
batch=1
subdivisions=1
# Training
#batch=64
#subdivisions=16
```

and then run the script to crop the test images.

Because I think there are only a few background images, I lower the threshold step by step through [0.45, 0.4, 0.35, ..., 0.1]. Images that already have a detection are set aside and the remaining ones are retried with the next threshold, to ensure there is a detection in each image.
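The threshold sweep can be sketched as follows. `detect(image, threshold)` is a hypothetical callable that stands in for the real darknet call and returns a list of detections; the fake detector at the bottom exists only to make the example runnable.

```python
def detect_with_fallback(images, detect, thresholds=None):
    """Retry undetected images with progressively lower thresholds."""
    if thresholds is None:
        # 0.45, 0.40, ..., 0.10
        thresholds = [round(0.45 - 0.05 * i, 2) for i in range(8)]
    results = {}
    pending = list(images)
    for threshold in thresholds:
        still_pending = []
        for image in pending:
            detections = detect(image, threshold)
            if detections:
                results[image] = (threshold, detections)  # done with this image
            else:
                still_pending.append(image)
        pending = still_pending
        if not pending:
            break
    return results, pending


# Fake detector for illustration: each "image" name maps to a confidence.
confidences = {"a.jpg": 0.9, "b.jpg": 0.3, "c.jpg": 0.05}


def fake_detect(image, threshold):
    return ["plate"] if confidences[image] >= threshold else []


results, missed = detect_with_fallback(confidences, fake_detect)
print(results["b.jpg"][0], missed)  # 0.3 ['c.jpg']
```

Images that remain in `missed` after the lowest threshold have no detection at all and need separate handling.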

```console
$ python demo_image.py
```

Then run the recognition test:

```console
$ cd recognition/
$ python test.py
```

There are sometimes multiple detections in an image, so I fuse the results according to the confidence score.

I use ResNet18+GRU with YOLOv3 and get 98.8% accuracy on the Kaggle public leaderboard. I am especially grateful to Prof. Liao and the TAs for delivering a fantastic ML course and Kaggle competitions.

Code

Papers

[0]. You Only Look Once: Unified, Real-Time Object Detection by Joseph Redmon et al.

[1]. YOLO9000: Better, Faster, Stronger by Joseph Redmon et al.

[2]. YOLOv3: An Incremental Improvement by Joseph Redmon et al.

[3]. Deep Residual Learning for Image Recognition by Kaiming He et al.

[4]. Visualizing the Loss Landscape of Neural Nets by Hao Li et al.

[5]. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation by Kyunghyun Cho et al.

others

All the results are tested on the Kaggle public leaderboard.

To reproduce a result I've trained, copy para.py, resnet.py and model.py from the corresponding recognition/experiment folder into recognition/, overwriting the existing files, then change the weight path and select the matching model name in test.py.

No switch-axes in model.py

`x = Lambda(lambda x: K.permute_dimensions(x,(0,2,1,3)))(x) # switchaxes from [b,h,w,c] to [b,w,h,c]`

* Reshaping from `[height,width,channel]` to `[height, width*channel]` turns out to give 98.43% accuracy, not bad actually.

I've spotted that reshaping `[h,w,c]` to `[w,h*c]` is different from reshaping `[w,h,c]` to `[w,h*c]`; `[w,h,c]` is the correct method. So there are newer experiments below.

* Residual blocks `[2,2,2,2]`: 98.0% accuracy.
* Changing height_shift_range and shear_range both from 0.1 to 0.2 improves performance by ~0.5%.
* Residual blocks `[2,2,2,2]` and filter=64 instead of 32: 98.86% accuracy (best). (8_experiment folder weight link)
* Parameters in para.py, such as the size of the Dense layer (between CNN and RNN) and the RNN size, as well as the composition of the residual block, can be changed.